Watch two Mini Cheetah robots square off on the soccer field

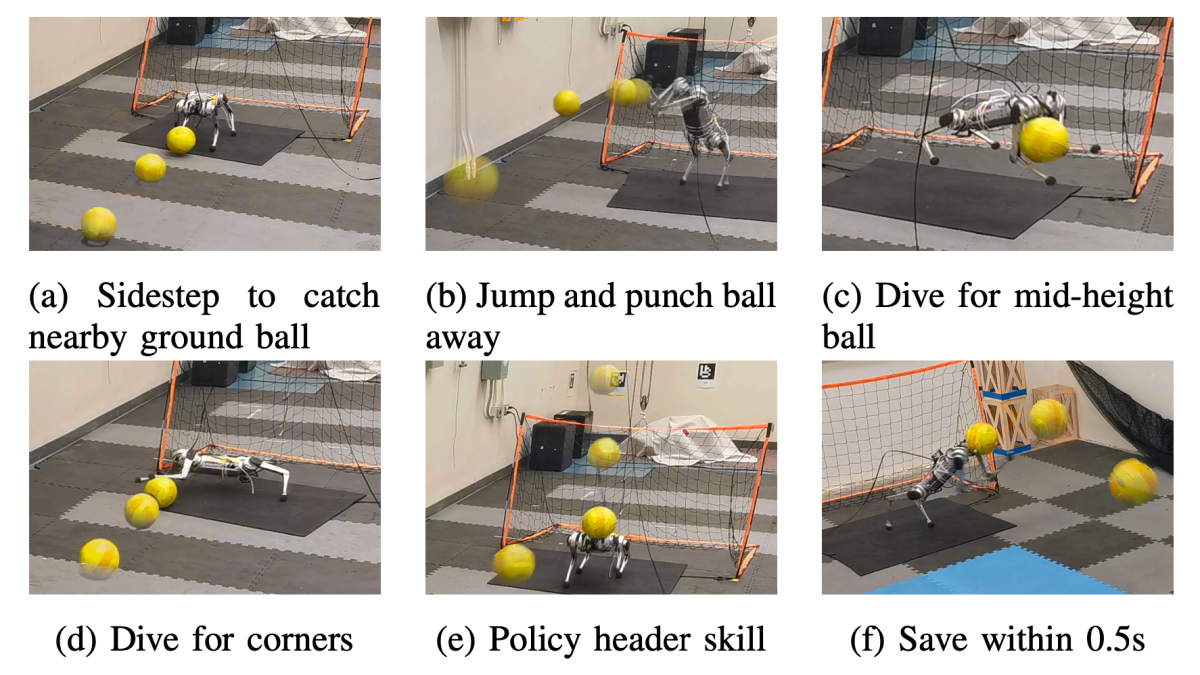

Some robotics challenges have immediately clear applications. Others are more focused on helping systems solve broader challenges. Teaching small robots to play soccer against one another fits firmly into the latter category.

The authors of a new paper detailing the use of reinforcement learning to teach MIT’s Mini Cheetah robot to play goalie note, “Soccer goalkeeping using quadrupeds is a challenging problem, that combines highly dynamic locomotion with precise and fast non-prehensile object (ball) manipulation. The robot needs to react to and intercept a potentially flying ball using dynamic locomotion maneuvers in a very short amount of time, usually less than one second. In this paper, we propose to address this problem using a hierarchical model-free RL framework.”

Image Credits: Hybrid Robotics

Effectively, the robot needs to lock onto a projectile and maneuver itself to block the ball in under a second. The robot’s parameters are defined in an emulator, and the Mini Cheetah relies on a trio of moves (sidestep, dive, and jump) to block the ball on its way to the goal, determining the ball’s trajectory while in motion.

To test the efficacy of the program, the team pitted the system against both a human opponent and a fellow Mini Cheetah. Notably, the same basic framework used to defend the goal can be applied to offense. The paper’s authors note, “In this work, we focused solely on the goalkeeping task, but the proposed framework can be extended to other scenarios, such as multi-skill soccer ball kicking.”